The modern gaming industry has evolved into a dynamic blend of art, science, and innovation, with graphics and rendering technologies playing a critical role in shaping player experiences. The visual realism, lighting effects, and performance optimization that define today’s games are made possible by advanced rendering engines, GPU power, and real-time simulation techniques. For game developers and enthusiasts, understanding how these technologies work is key to appreciating the craftsmanship behind every visual frame.

The Role of Graphics in Game Development

Graphics are the heart of any video game. They determine how immersive, realistic, or stylized a game appears to its audience. Whether it’s the lifelike environments of Red Dead Redemption 2 or the vibrant world of Fortnite, graphical quality significantly influences a player’s engagement and emotional connection with the game.

In the past, gaming graphics were limited by hardware constraints and basic pixel-based systems. However, with the advancement of 3D modeling, real-time rendering, and GPU acceleration, the industry has reached a point where games can rival cinematic experiences. Today, developers leverage advanced rendering pipelines and artificial intelligence-driven graphics to create worlds that feel alive and interactive.

Evolution of Rendering Technologies

Rendering technology has come a long way since the early days of 2D sprites and tile-based graphics. The evolution of rendering techniques has made it possible to simulate realistic light behavior, shadows, reflections, and materials in real time.

One of the most significant leaps in gaming visuals came with the introduction of 3D graphics. Early engines like id Tech and Unreal paved the way for fully immersive 3D environments. Later, advancements in shaders, ray tracing, and global illumination transformed the quality of visual storytelling.

Rendering now relies heavily on specialized graphics hardware (GPUs) that perform the massively parallel calculations needed to draw millions of polygons in every frame. Low-level graphics APIs like DirectX 12 and Vulkan, along with the older but still widely supported OpenGL, give developers access to this power while keeping performance overhead in check.

Key Graphics and Rendering Technologies Used in Games

1. Rasterization

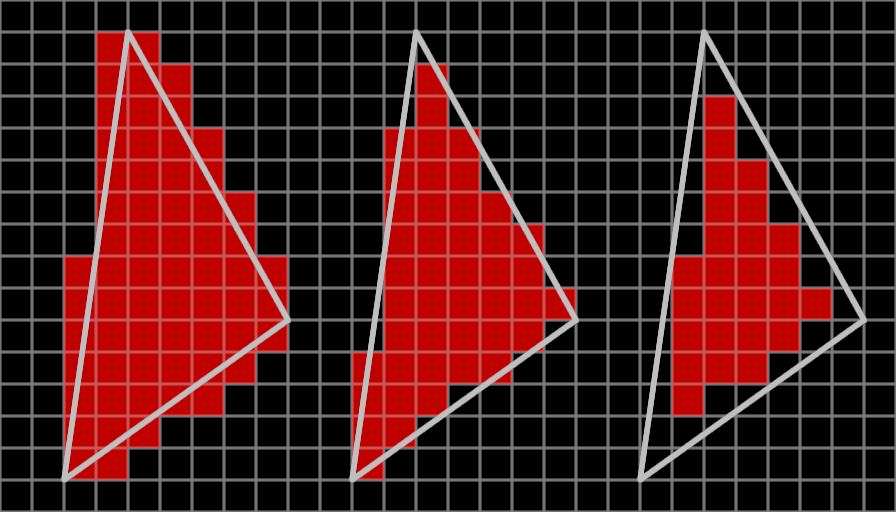

Rasterization is one of the most widely used techniques in real-time rendering. It converts 3D models into 2D images that can be displayed on the screen. Every 3D object is broken down into triangles; each triangle is projected onto the screen, and the pixels it covers are shaded using textures and lighting calculations to produce the final frame. Although rasterization is fast, it handles each triangle largely in isolation, so it struggles to accurately replicate complex light interactions such as reflections and indirect light. Developers therefore layer advanced lighting systems on top to bridge the gap between realism and performance.
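The coverage step described above can be sketched in a few lines. This is a minimal illustration, assuming 2D screen-space vertices and a brute-force loop over every pixel; the function names `edge` and `rasterize_triangle` are hypothetical, and real GPUs do this work in dedicated hardware with many optimizations.

```python
def edge(ax, ay, bx, by, px, py):
    """Signed area term: its sign tells which side of edge A->B point P is on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize_triangle(v0, v1, v2, width, height):
    """Return the set of (x, y) pixels whose centers lie inside the triangle."""
    covered = set()
    area = edge(*v0, *v1, *v2)
    if area == 0:
        return covered  # degenerate triangle covers no pixels

    for y in range(height):
        for x in range(width):
            px, py = x + 0.5, y + 0.5  # sample at the pixel center
            w0 = edge(*v1, *v2, px, py)
            w1 = edge(*v2, *v0, px, py)
            w2 = edge(*v0, *v1, px, py)
            # Inside when all three edge values share the sign of the area,
            # which makes the test independent of vertex winding order.
            if area > 0:
                inside = w0 >= 0 and w1 >= 0 and w2 >= 0
            else:
                inside = w0 <= 0 and w1 <= 0 and w2 <= 0
            if inside:
                covered.add((x, y))
    return covered


pixels = rasterize_triangle((0, 0), (4, 0), (0, 4), width=4, height=4)
```

A full rasterizer would also use the three edge values as barycentric weights to interpolate texture coordinates and depth across the triangle.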

2. Ray Tracing

Ray tracing is a rendering method that simulates how light interacts with objects in a scene. Rather than relying on the lighting approximations used in rasterization, it traces rays from the camera into the scene and follows them as they bounce between surfaces and toward light sources, producing highly realistic reflections, refractions, and shadows. This technique, once practical only for offline work such as Hollywood visual effects, has become part of gaming thanks to GPUs with dedicated ray tracing hardware, like NVIDIA’s RTX series and AMD’s RDNA 2 and later architectures.

Games like Cyberpunk 2077, Battlefield V, and Control showcase the impressive results of real-time ray tracing. However, ray tracing requires immense computational power, which is why hybrid systems that combine rasterization and ray tracing are commonly used.
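To make the idea of following a ray more concrete, here is a minimal sketch of the basic building block: intersecting one camera ray with a sphere and computing simple diffuse lighting at the hit point. The scene (a single sphere and a point light) and the function names are assumptions for illustration only; production ray tracers use acceleration structures and far richer shading.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Distance along a normalized ray to the nearest sphere hit, or None."""
    oc = [o - c for o, c in zip(origin, center)]
    b = 2.0 * sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - 4.0 * c  # quadratic discriminant (a == 1 for a unit direction)
    if disc < 0:
        return None  # the ray misses the sphere
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 0 else None


def diffuse_brightness(origin, direction, center, radius, light_pos):
    """Lambertian brightness in [0, 1] at the hit point, or 0.0 on a miss."""
    t = ray_sphere_hit(origin, direction, center, radius)
    if t is None:
        return 0.0
    hit = [o + t * d for o, d in zip(origin, direction)]
    normal = [(h - c) / radius for h, c in zip(hit, center)]
    to_light = [l - h for l, h in zip(light_pos, hit)]
    length = math.sqrt(sum(v * v for v in to_light))
    light_dir = [v / length for v in to_light]
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))


camera = (0.0, 0.0, 0.0)
distance = ray_sphere_hit(camera, (0.0, 0.0, -1.0), (0.0, 0.0, -5.0), 1.0)
brightness = diffuse_brightness(camera, (0.0, 0.0, -1.0), (0.0, 0.0, -5.0), 1.0, camera)
```

A renderer repeats this for at least one ray per pixel and then spawns further rays for shadows and reflections, which is where the heavy computational cost comes from.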

3. Global Illumination (GI)

Global illumination refers to the simulation of how light bounces off surfaces and indirectly illuminates other objects in a scene. This process adds depth and realism by accounting for subtle lighting effects such as color bleeding and ambient reflection. Techniques like baked GI, real-time GI, and screen-space global illumination (SSGI) are used to achieve high-quality lighting with manageable performance costs.
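Color bleeding can be illustrated with a deliberately simplified single-bounce calculation. The sketch below assumes perfectly diffuse surfaces and folds all geometry (distance, orientation, visibility) into one hypothetical `coupling` factor; real GI systems compute that term per surface point and usually account for more than one bounce.

```python
def one_bounce(light_rgb, bouncer_albedo, receiver_albedo, coupling):
    """Indirect light reaching the viewer after one diffuse bounce.

    coupling is an assumed 0..1 factor standing in for the geometric
    relationship between the two surfaces.
    """
    # Light leaving the first surface is tinted by that surface's color.
    bounced = [l * a for l, a in zip(light_rgb, bouncer_albedo)]
    # The receiving surface reflects a fraction of the tinted light.
    return [coupling * b * r for b, r in zip(bounced, receiver_albedo)]


white_light = (1.0, 1.0, 1.0)
red_wall = (0.8, 0.1, 0.1)
white_floor = (0.9, 0.9, 0.9)
indirect = one_bounce(white_light, red_wall, white_floor, coupling=0.5)
```

With these assumed values the floor picks up far more red than green or blue from the bounce, which is the reddish tint seen on a white floor next to a red wall.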

4. Physically Based Rendering (PBR)

Physically Based Rendering has become a cornerstone of modern game visuals. PBR ensures that materials react to light in ways consistent with real-world physics. For instance, metal surfaces reflect light differently from wood or fabric. By using mathematical models for light interaction, developers can achieve consistent visual results across different lighting conditions.

Engines like Unreal Engine, Unity, and CryEngine use PBR as a standard, allowing artists to focus on creativity without worrying about inconsistent lighting or texture realism.
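One small piece of the mathematical models mentioned above is the Fresnel term, which describes how reflectivity rises at grazing angles. The sketch below uses Schlick’s approximation, a common choice in real-time PBR shaders. The base reflectivity values are illustrative assumptions: about 0.04 is a widely used default for non-metals, and the metal value here is a placeholder.

```python
def schlick_fresnel(f0, cos_theta):
    """Schlick's approximation of Fresnel reflectance.

    f0        -- reflectance when looking straight at the surface
    cos_theta -- cosine of the angle between view direction and surface normal
    """
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


DIELECTRIC_F0 = 0.04  # assumed typical value for wood, fabric, plastic
METAL_F0 = 0.90       # illustrative value only; real metals vary by wavelength

head_on_wood = schlick_fresnel(DIELECTRIC_F0, 1.0)
head_on_metal = schlick_fresnel(METAL_F0, 1.0)
grazing_wood = schlick_fresnel(DIELECTRIC_F0, 0.0)
```

Viewed head-on, the non-metal reflects only a few percent of the light while the metal reflects most of it. At grazing angles both approach full reflectance, which is why even a matte floor looks shiny when viewed nearly edge-on.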

5. Tessellation and Level of Detail (LOD)

Tessellation is a process where polygons are dynamically subdivided on the GPU to add finer detail to 3D models, typically as the camera moves closer to them. This allows for realistic terrains, detailed character models, and smoother surfaces. Level of Detail (LOD) complements tessellation by swapping in lower-polygon versions of distant objects, improving performance with little visible loss of quality.

Together, these technologies ensure that games maintain visual fidelity while running efficiently across various hardware configurations.
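A distance-based LOD switch can be sketched as a simple threshold lookup. The distances below are hypothetical; engines typically tune them per asset and often base the decision on projected screen size rather than raw distance.

```python
import bisect


def select_lod(distance, thresholds):
    """Return the LOD index for a camera distance.

    thresholds is a sorted list of switch distances. Index 0 is the most
    detailed mesh; each threshold reached moves to a coarser mesh.
    """
    return bisect.bisect_right(thresholds, distance)


LOD_DISTANCES = [10.0, 30.0, 80.0]  # assumed switch points in world units

near = select_lod(5.0, LOD_DISTANCES)
mid = select_lod(50.0, LOD_DISTANCES)
far = select_lod(200.0, LOD_DISTANCES)
```

Here an object 5 units away uses the full-detail mesh (index 0), one at 50 units uses the third mesh (index 2), and anything at 80 units or beyond falls back to the coarsest mesh (index 3).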

6. Volumetric Effects

Volumetric rendering brings realism to atmospheric elements such as fog, smoke, clouds, and light shafts. These effects simulate how light scatters through air and particles, adding mood and depth to a scene. For example, the sunlight filtering through trees in Horizon Forbidden West or the misty environments in Resident Evil Village are products of advanced volumetric rendering.
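The way fog dims light along a view ray can be sketched with ray marching and the Beer-Lambert law. The example below is a minimal illustration that only tracks how much light survives the trip (transmittance); the function name and density values are assumptions, and real volumetric renderers also add light scattered toward the camera at each step.

```python
import math


def march_transmittance(density_at, length, steps):
    """Fraction of light that survives a ray of the given length.

    density_at(t) returns the fog density at distance t along the ray.
    """
    dt = length / steps
    transmittance = 1.0
    for i in range(steps):
        t = (i + 0.5) * dt  # sample the middle of each segment
        # Beer-Lambert: each segment attenuates light exponentially.
        transmittance *= math.exp(-density_at(t) * dt)
    return transmittance


uniform_fog = march_transmittance(lambda t: 0.1, length=10.0, steps=32)
clear_air = march_transmittance(lambda t: 0.0, length=10.0, steps=32)
```

For uniform fog the marched result matches the closed-form value `exp(-density * length)`; the step-by-step loop matters once density varies along the ray, as in clouds or drifting smoke.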

7. Post-Processing and Shaders

Post-processing is the final stage of rendering, where visual effects are applied to the rendered image to enhance it. Techniques like bloom, motion blur, depth of field, and screen-space ambient occlusion contribute to a cinematic presentation. Shaders, small programs that run on the GPU and control how vertices and pixels are processed, play a crucial role in this process, enabling unique visual styles and effects across different games.
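As an illustration of a post-processing effect, bloom can be reduced to three steps: keep only the pixels brighter than a threshold, blur them, and add the result back to the image. The sketch below applies this to a single row of brightness values with a three-pixel box blur; the threshold, strength, and function name are assumptions, and real implementations run as GPU shaders on full 2D images with wider blurs.

```python
def bloom_row(row, threshold=1.0, strength=0.5):
    """Apply a simple 1D bloom to a row of HDR brightness values."""
    # 1. Bright pass: keep only the energy above the threshold.
    bright = [max(0.0, v - threshold) for v in row]

    # 2. Blur: three-pixel box filter, treating off-image pixels as black.
    blurred = []
    for i in range(len(bright)):
        window = bright[max(0, i - 1): i + 2]
        blurred.append(sum(window) / 3.0)

    # 3. Composite: add the blurred glow back onto the original row.
    return [v + strength * b for v, b in zip(row, blurred)]


result = bloom_row([0.2, 0.2, 4.0, 0.2, 0.2])
```

The single bright pixel spreads a soft glow onto its neighbors, while pixels farther away are left unchanged.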

Game Engines and Their Rendering Capabilities

Modern game engines are the backbone of game development, providing built-in tools and rendering pipelines. Unreal Engine 5, with its Lumen and Nanite technologies, supports real-time global illumination and high-density geometric detail with far less manual optimization than earlier workflows required. Unity remains a favorite among indie developers for its flexible rendering system and cross-platform capabilities. CryEngine, EA’s in-house Frostbite, and Amazon’s Lumberyard (since succeeded by the open-source Open 3D Engine) also offer advanced graphical features aimed at AAA production.

Artificial Intelligence in Graphics Rendering

AI-driven graphics have become a transformative force in rendering technology. Upscaling techniques render each frame at a lower internal resolution and then reconstruct a higher-resolution image. NVIDIA DLSS (Deep Learning Super Sampling) uses a trained neural network for this reconstruction, while earlier versions of AMD FSR (FidelityFX Super Resolution) pursue the same goal with hand-tuned spatial and temporal algorithms rather than machine learning. These methods enable smoother gameplay while approaching the image quality of native 4K rendering.
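The performance benefit of upscaling comes largely from shading fewer pixels per frame. The arithmetic can be sketched as follows; the per-axis scale factor of 1.5 is an illustrative assumption and is not tied to any particular vendor preset.

```python
def internal_resolution(output_width, output_height, scale):
    """Internal render size for a given per-axis upscale factor."""
    return round(output_width / scale), round(output_height / scale)


def shaded_fraction(output_width, output_height, scale):
    """Fraction of output pixels that are actually rendered each frame."""
    w, h = internal_resolution(output_width, output_height, scale)
    return (w * h) / (output_width * output_height)


render_size = internal_resolution(3840, 2160, scale=1.5)
fraction = shaded_fraction(3840, 2160, scale=1.5)
```

With these numbers a 4K output is rendered internally at 2560 by 1440, so only about 44% of the final pixels are shaded directly and the upscaler reconstructs the rest.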

AI is also being integrated into texture generation, animation, and lighting prediction, reducing manual labor and improving development efficiency.

The Future of Graphics and Rendering

The future of game graphics is driven by real-time ray tracing, neural rendering, and cloud-based rendering technologies. As hardware becomes more powerful, developers will have greater freedom to create vast, detailed, and dynamic worlds without compromising performance. The integration of virtual reality (VR) and augmented reality (AR) will further push the boundaries of immersion, demanding even more advanced rendering solutions.

With technologies like Unreal Engine 5 and advancements in GPU architecture, the line between reality and virtual worlds continues to blur. Players can expect increasingly lifelike characters, seamless open worlds, and interactive environments that respond intuitively to their actions.